What gaslighting means and why it’s hard to prove

Gaslighting isn’t just “someone disagreeing with you.” It’s a manipulation pattern where a person tries to make you doubt your memory, perception, or understanding of events – so they can gain control, dodge accountability, or keep you off-balance. That’s close to how the American Psychological Association defines gaslighting: manipulating someone into doubting their perceptions or experiences.

The reason gaslighting is so destabilizing is that it often works in small doses:

- “That never happened.”

- “You’re too sensitive.”

- “I never said that – you must be confused.”

- “Everyone thinks you’re overreacting.”

- “Sorry you feel that way.”

One comment can be brushed off. But repeated over time, the pattern can make you second-guess your own reality. And once your confidence is shaken, it becomes easier for the manipulator to rewrite what’s “true.”

Gaslighting also gets messy because normal conflict exists. Couples misremember details. Coworkers interpret tone differently. Families argue. The difference is usually the pattern + power dynamic + intent: a consistent push to invalidate your reality, while refusing repair, evidence, or accountability.

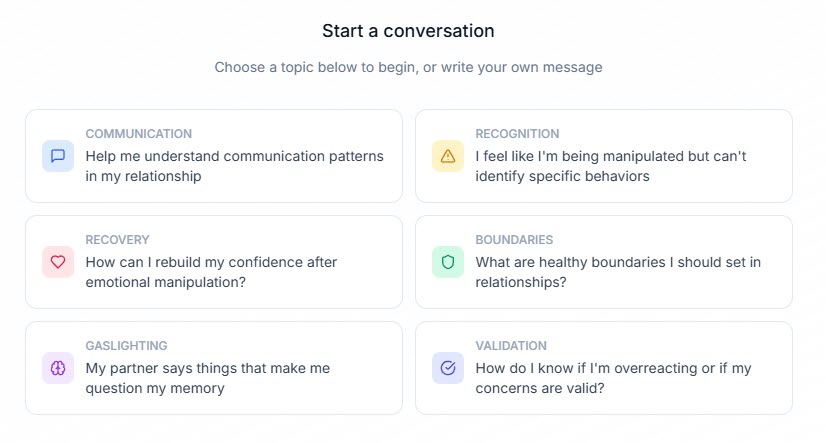

What gaslightingcheck.com claims to do with AI

gaslightingcheck.com positions itself as an “AI-powered” tool that analyzes text and audio to identify potential gaslighting/manipulation patterns, then returns a report (with premium features like history and detailed insights).

Its Privacy Policy says submitted text and audio are processed, and that it uses the OpenAI API for transcription and text analysis, Supabase for storage and authentication, and Stripe for payments.

Its Terms also emphasize an important limitation: results are “subjective assessments,” not a professional diagnosis.

So what’s the real promise here? Not “this proves abuse in court.” More like:

“This might help you spot patterns you’re too stressed to name in the moment.”

What AI can and can’t detect in real life

AI can be useful for certain things:

- Pattern-spotting in language: repeated denial, blame-shifting, emotional invalidation, “everyone agrees with me,” etc.

- Documentation support: organizing examples over time can reduce that “maybe I’m imagining it?” spiral.

- Reflection prompts: a report can push you to ask better questions: Was that a one-off, or does it repeat?

But AI also has serious blind spots:

- Context is everything. A single sentence can be playful banter, cultural communication style, neurodivergent directness, or cruelty. AI can’t reliably know which.

- Missing history. Gaslighting is often about the relationship pattern, not a single quote.

- False confidence. A “high severity” score might make you feel certain when reality is nuanced – or make you feel worse when you’re already fragile.

This is a broader issue with automated language judgments: even strong systems can struggle with ambiguity and make mistakes, especially when the stakes are high.

A good mental model is: AI can help you notice. It can’t decide for you.

Privacy, security, and consent are non-negotiable

If you’re analyzing sensitive conversations, privacy isn’t a “nice to have.” It’s the entire game.

Gaslighting Check’s Privacy Policy says it may store your submitted content (text/audio), analysis results, and usage data. It also describes tier-based retention (for example, 7 days on the free tier, 30 days on Plus, and “indefinitely” on Pro) and lists its third-party processors (OpenAI, Supabase, Stripe, Google auth).

Before using any tool like this, do a quick safety checklist:

- Consent: Are you uploading someone else’s words/voice without permission? That can be ethically and legally risky.

- Minimize identifiers: Don’t upload full names, addresses, or anything you wouldn’t want exposed.

- Retention: “Deleted after X days” can still mean backups or logs exist for a period (and policies can change).

- Jurisdiction: Their policy notes international processing (including the U.S.).

If you’re in a high-risk situation (controlling partner, workplace retaliation, custody conflict), assume anything you upload could someday be discovered – even if the company is acting in good faith.
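The “minimize identifiers” step in the checklist above can be partly done on your own device before anything is uploaded. What follows is a minimal sketch, not a complete anonymizer: the `redact` function and its patterns are assumptions made for illustration, the regular expressions are deliberately rough (they will miss some formats and may also catch things like dates), and names are only replaced if you list them yourself.

```python
import re

# Rough patterns, assumed for illustration only.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text, names=()):
    """Replace emails, phone-like numbers, and listed names with placeholders."""
    # Emails go first so a name inside an address is not redacted separately.
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    for i, name in enumerate(names, start=1):
        # Whole-word, case-insensitive match for each name you supply.
        pattern = re.compile(r"\b" + re.escape(name) + r"\b", re.IGNORECASE)
        text = pattern.sub(f"[PERSON_{i}]", text)
    return text


snippet = "Dana said: I never said that. Call me on 555-123-4567 or dana@example.com."
print(redact(snippet, names=["Dana"]))
# [PERSON_1] said: I never said that. Call me on [PHONE] or [EMAIL].
```

Always reread the result before sharing it. Nicknames, workplaces, and places can identify someone just as easily as a name, and no pattern list will catch them all.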

How to use an AI check without losing your reality

If you try an AI gaslighting detector, here’s a safer way to use it:

- Capture (lightly)

  - Save only the specific snippet you’re worried about.

  - Remove identifying details.

  - Add a one-line note: What happened right before/after? How did I feel?

- Reflect (human-first)

  Ask:

  - Is this a one-off or a repeated pattern?

  - Do I feel confused after interactions with this person – often?

  - Do they repair harm when it’s pointed out, or do they attack my character?

- Corroborate

  - Check with a trusted friend, therapist, or mentor (someone who won’t dismiss you).

  - Compare your notes over time.

- Decide your next move

  AI output should support action like:

  - Setting boundaries

  - Changing communication channels (more written, less verbal)

  - Seeking HR/management support (workplace)

  - Creating distance and safety planning (relationships)
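If you keep your notes on your own device, the “one-off or repeated pattern?” question can be checked with a few lines of code and no upload at all. This is only a sketch under stated assumptions: the entries are made-up examples, the labels are whatever words you choose for yourself, and `repeated_labels` is a hypothetical helper that counts. It does not judge or diagnose anything.

```python
from collections import Counter
from datetime import date

# Hypothetical notes: (date, your own label, one-line context).
notes = [
    (date(2024, 3, 2), "denial", "Said the call never happened."),
    (date(2024, 3, 9), "blame-shifting", "Late pickup became my fault."),
    (date(2024, 3, 20), "denial", "Insisted I agreed to the plan."),
    (date(2024, 4, 1), "denial", "Told me I imagined the text."),
]


def repeated_labels(entries, min_count=2):
    """Return labels that appear at least min_count times, with their counts."""
    counts = Counter(label for _, label, _ in entries)
    return {label: n for label, n in counts.items() if n >= min_count}


print(repeated_labels(notes))
# {'denial': 3}
```

A count like this is a prompt for the reflection and corroboration steps, not a verdict. It tells you something has repeated, not why.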

When to skip the tool and get human help

Use tools as support – not substitutes – especially if you notice:

- Fear of retaliation or escalation

- Isolation from friends/family

- Threats, stalking, coercion, or financial control

- Feeling “crazy,” panicky, or unsafe

Gaslighting can be linked with negative mental health outcomes, and if you’re deteriorating, you deserve real support – not just analysis. (If you’re in immediate danger, contact local emergency services or a local hotline.)
